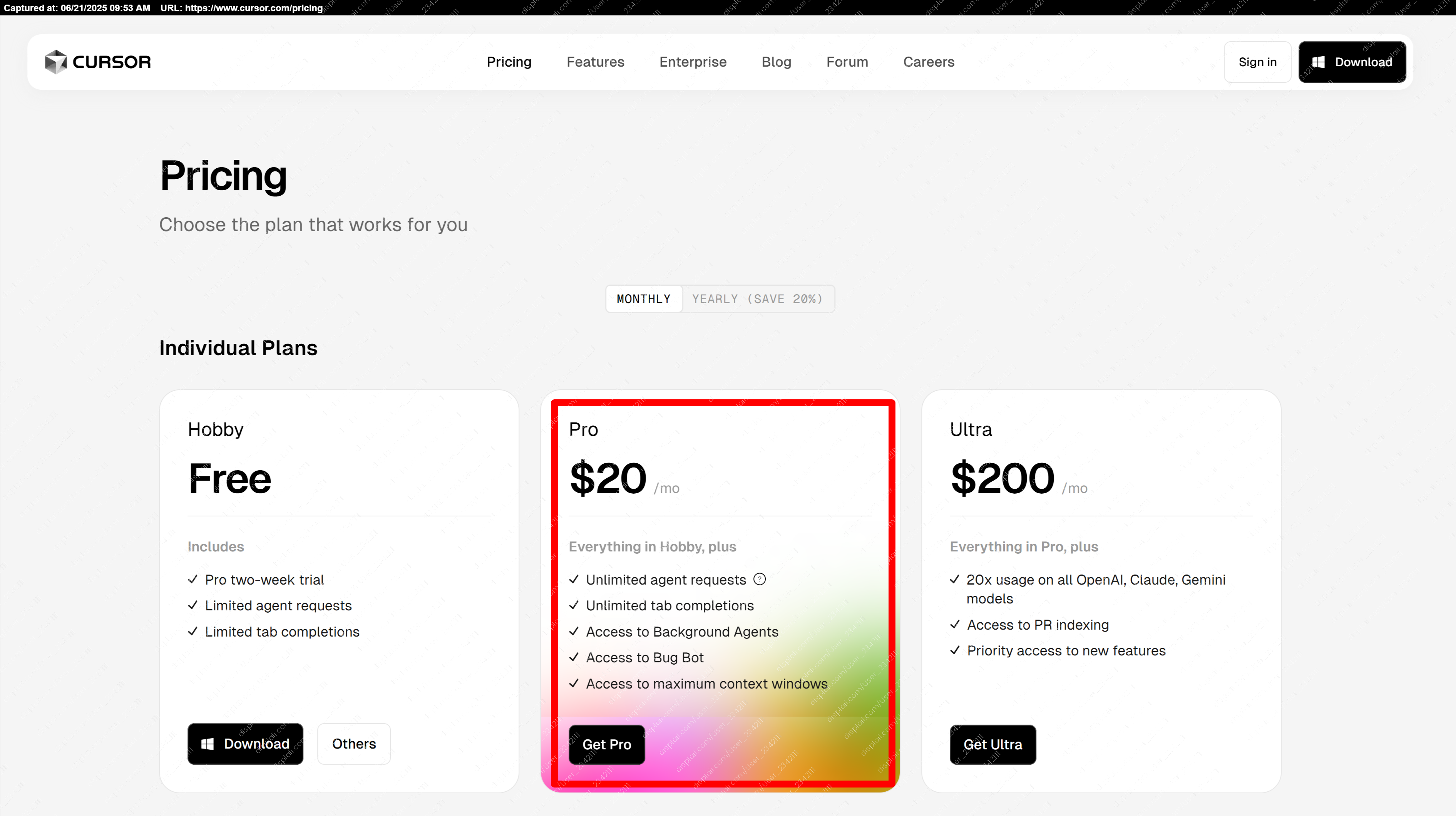

So Augment offers a Context Engine, MCP integrations, Persistent Memory and more... Try it here; it's free (for about 50 messages a month... now where have I heard that before? Cursor and Windsurf, huh???)

https://www.augmentcode.com/

And the announcement made in April this year is in this article here:

https://www.augmentcode.com/blog/meet-augment-agent

#augment #augmentcode #contextengine #mcp #persistentmemory #aicoding #aidevelopment #cursor #windsurf #codecompletion #ai #codingtools #softwaredevelopment #codingassistant #freecodingtools
